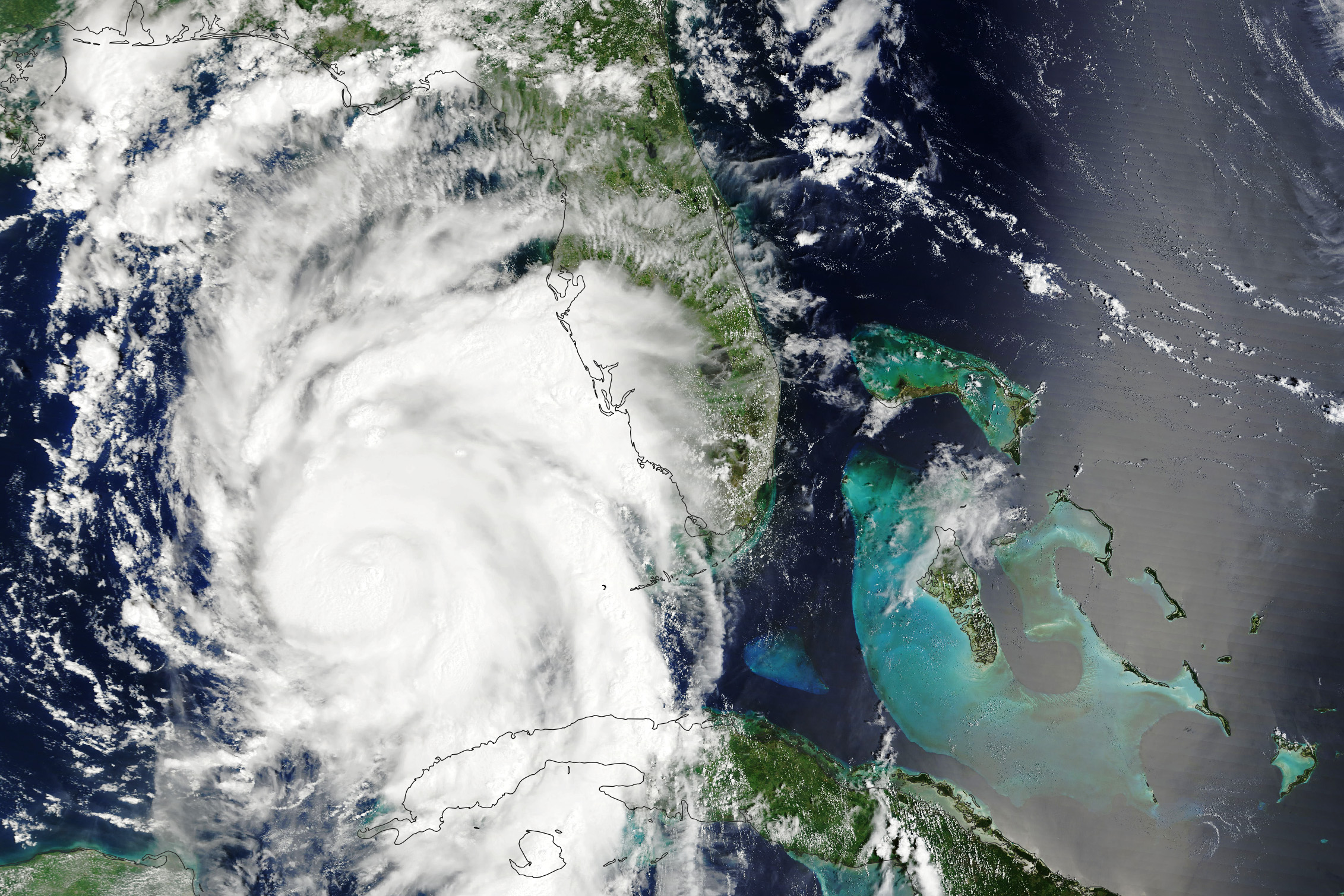

Hurricane Idalia barreled across the southeast coast of the United States.

With no intent to make light of the foreboding trauma this brings to those in its path (after all, this writer resides in Houston), let’s nevertheless recognize that such storms are neither a rare occurrence in U.S. history nor a more frequent one today.

Nevertheless, we’ve all heard it before: more evidence that climate change is the greatest existential threat … we’re causing it through unfair excess prosperity.

The solution is to “transition” from fossil sources that supply more than 80% of U.S. and global energy to intermittent sunbeams and windy breezes that provide a total of about 3%, along with highly subsidized electric vehicles that depend upon rare earth minerals mined by slave and child labor in China and the Congo, mining that is prohibited here because it pollutes the environment.

Why?

Because we’re dangerously changing not only the climate but the weather, and thereby causing more frequent and severe forest fires as well.

But actually … not really.

First, let’s recognize that global temperatures were as warm or warmer 2,000 years ago during the Roman Warm Period, 1,000 years ago during the Medieval Warm Period, and even a smidgen under 100 years ago in the 1930s.

That was followed by three decades of cooling, which began in the mid-1940s despite World War II weapons industries having released massive amounts of CO2 into the atmosphere, and which had leading scientific and news organizations predicting the onset of a next Ice Age by the late ’70s.

We’re also supposed to believe that a global two-degree Fahrenheit (1 degree Celsius) temperature increase since the pre-industrial era (1880-1900) has produced alarmingly more frequent and severe weather and related wildfire events.

Recorded evidence indicates otherwise as well.

As noted by The Weather Channel a year ago, “It’s The Atlantic Hurricane Season’s Least Active Start In 30 Years” since 1992.

Whereas Hurricane Ian, which devastated large areas of Florida last year, was indisputably a monster storm, a basic review of history reveals that media hype presenting it as evidence of a recent “climate change disaster” is entirely unfounded, since such occurrences have been experienced with far greater frequency and damaging, sometimes fatal, consequences over the past century and before.

For a larger historical perspective, consider that North Atlantic tropical storm and hurricane patterns fail to reveal any worsening trend over more than a century.

Cat 3-4 Hurricane/Tropical Storm Harvey and Cat 4 Hurricane Irma back in 2017 ended an almost 12-year drought of U.S. landfall Cat 3-5 hurricanes since Wilma in 2005, whereas 14 even stronger Cat 4-5 monsters occurred between 1926 and 1969.

Many intense Atlantic storms formed between 1870 and 1899, 19 in the 1887 season alone, but then became infrequent again between 1900 and 1925. The number of destructive hurricanes ramped up between 1926 and 1960, including many major New England events.

As for more recent hurricanes, the 2005 and 1961 seasons shared records for their seven major U.S. landfalls since 1946, whereas 1983 set the record for the fewest, with only one.

Twenty-one Atlantic tropical storms formed in 1933 alone, a record not exceeded until 2005, which saw 28 storms.

Tropical cyclone Amelia dumped 48 inches on Texas in 1978; tropical storm Claudette inundated the town of Alvin, Texas, with 54 inches in 1979, emptying 43 inches in just 24 hours; and Hurricane Easy deluged Florida with 45.2 inches in 1950.

In terms of U.S. fatalities, “Superstorm Sandy,” which ravaged the northern East Coast in 2012, resulted in more than 100 deaths; the San Felipe Segundo Hurricane, which struck Florida in 1928, produced an estimated 2,500 casualties; and the Galveston hurricane of September 8, 1900, may have killed up to 12,000 people.

Katrina reached Cat 5 hurricane level in 2005, packing 175 mph wind speeds, before hitting the Louisiana coast as a Cat 3 hurricane with a 20-foot storm surge, resulting in about 1,800 deaths.

Regarding alarmist tropes about global warming setting the world on fire, well-known climate writer Bjorn Lomborg points out, “It turns out the percentage of the globe that burns each year has been declining since 2001.”

Global Wildfire Information System records this year up to July 29 show that while more land has burned in the Americas than usual, much of the world, Africa and Europe in particular, has seen less, with the global total slightly below the 2012-2022 average, a period that already saw some of the lowest forest losses.

Lomborg blames land management failures to clear dead trees and other vegetative tinder for the most expansive American fires. Even so, he notes that U.S. fires burned less than one-fifth the acreage burned in the 1930s, and only one-tenth as much as in the early 20th century.

In any case, whether fewer or more prevalent, there’s no factual basis for attributing severe weather or wildfire occurrences to smoke stacks and SUVs.

Nevertheless, while we can’t change the weather, it truly is in our best interest to anticipate those bad-case circumstances and prepare our communities and households to mitigate the outcomes.

Whether or not one such event gets hyped in the media as the “biggest ever,” “strongest ever,” “deadliest ever,” or “costliest ever,” it may qualify as the worst ever for you.

Unfortunately, it’s all too easy to forget to prepare for this on nice sunny days.

This article originally appeared at NewsMax